Exo-Machina Hack 2.0 + Redeem Codes

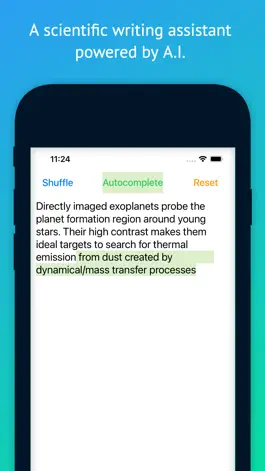

A.I.-powered writing assistant

Developer: Kyle Pearson

Category: Productivity

Price: Free

Version: 2.0

ID: ai.exomachina.pearsonkyle

Description

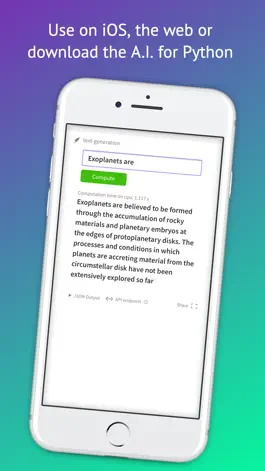

A deep language model, GPT-2, trained on scientific manuscripts from NASA's Astrophysical Data System and arXiv, is used to generate text and to explore relationships within the scientific literature. Explore the source code on GitHub!

Version history

2.0

2021-01-11

New Features:

- Multiple Choice

- Secret mode

1.0

2020-12-04

Ways to hack Exo-Machina

- Redeem codes

Ratings

2 out of 5

4 Ratings

Reviews

congressman roby,

what

Can't close out the keyboard to generate text??