MuSA_RT Hack 2.0.3 + Redeem Codes

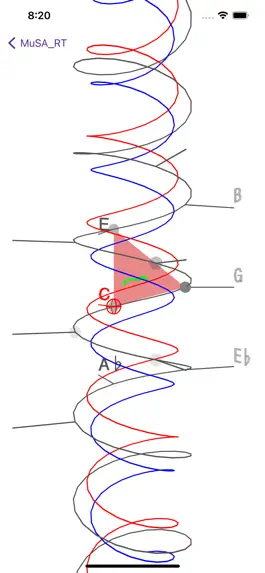

Tonality 3D visualization + AR

Developer: Alexandre Francois

Category: Music

Price: Free

Version: 2.0.3

ID: com.arjf.musart

Screenshots

Description

MuSA_RT animates a visual representation of tonal patterns - pitches, chords, key - in music as it is being performed.

MuSA_RT applies music analysis algorithms rooted in Elaine Chew's Spiral Array model of tonality, which also provides the 3D geometry for the visualization space.

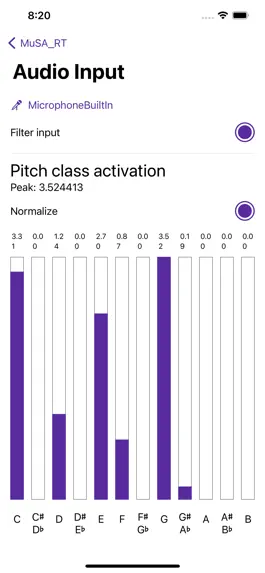

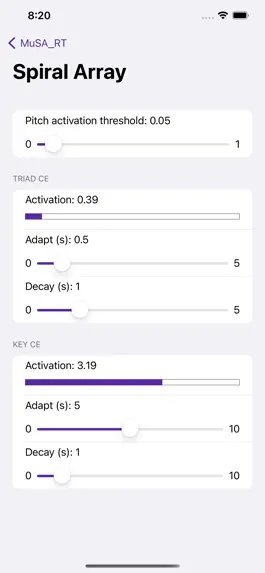

MuSA_RT interprets the audio signal from a microphone to determine pitch names, maintain short-term and long-term tonal context trackers, each a Center of Effect (CE), and compute the closest triads (3-note chords) and keys as the music unfolds in performance.
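To make the Center of Effect idea concrete, here is a minimal sketch of the Spiral Array geometry: pitches sit on a helix indexed along the line of fifths, a CE is a weighted average of pitch positions, and the closest triad is the nearest chord point. It is not the app's code. The radius, height, triad weights, search range, and all function names are illustrative assumptions, and keys, pitch detection, and the short-term and long-term decay of the trackers are left out.

```python
import math

# Illustrative parameters (assumptions, not taken from the app).
RADIUS = 1.0
HEIGHT = math.sqrt(2.0 / 15.0)   # rise per step along the line of fifths
TRIAD_WEIGHTS = (0.5, 0.3, 0.2)  # root, fifth, third

# Natural pitch letters in line-of-fifths order; C maps to index 0.
LETTERS = ["F", "C", "G", "D", "A", "E", "B"]


def fifths_index(name):
    """Line-of-fifths index of a pitch name such as 'C', 'F#', or 'Bb'."""
    base = LETTERS.index(name[0]) - 1
    return base + 7 * name.count("#") - 7 * name[1:].count("b")


def pitch_name(k):
    """Inverse of fifths_index."""
    accidentals = (k + 1) // 7
    suffix = "#" * accidentals if accidentals > 0 else "b" * -accidentals
    return LETTERS[(k + 1) % 7] + suffix


def pitch_position(k):
    """Point on the helix: a quarter turn and one HEIGHT step per fifth."""
    angle = k * math.pi / 2.0
    return (RADIUS * math.sin(angle), RADIUS * math.cos(angle), k * HEIGHT)


def center_of_effect(weighted_points):
    """Weighted average of (point, weight) pairs."""
    total = sum(weight for _, weight in weighted_points)
    return tuple(
        sum(point[axis] * weight for point, weight in weighted_points) / total
        for axis in range(3)
    )


def triad_position(root, quality):
    """Chord point: weighted combination of root, fifth, and third."""
    third = root + 4 if quality == "major" else root - 3
    members = (root, root + 1, third)
    return center_of_effect(
        [(pitch_position(k), w) for k, w in zip(members, TRIAD_WEIGHTS)]
    )


def closest_triad(ce, roots=range(-8, 9)):
    """Name of the triad whose chord point is nearest to the given CE."""
    candidates = [(r, q) for r in roots for q in ("major", "minor")]
    root, quality = min(
        candidates, key=lambda c: math.dist(ce, triad_position(*c))
    )
    return f"{pitch_name(root)} {quality}"


def ce_of_notes(notes):
    """CE of (pitch name, duration) pairs, weighting each pitch by duration."""
    return center_of_effect(
        [(pitch_position(fifths_index(name)), dur) for name, dur in notes]
    )
```

With these assumed parameters, `closest_triad(ce_of_notes([("C", 1.0), ("E", 1.0), ("G", 1.0)]))` resolves to `C major`. A real-time tracker would also need to discount older notes over time, which this sketch does not attempt.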

MuSA_RT presents a graphical representation of these tonal entities in context, smoothly rotating the virtual camera to provide an unobstructed view of the current information.

MuSA_RT also offers an experimental immersive augmented reality experience where supported.

Version history

2.0.3

2021-01-27

Bug fixes and UI tweaks.

2.0.1

2021-01-17

Bug fixes and UI tweaks.

2.0.0

2021-01-06

Ratings

5 out of 5

1 Rating

Reviews

kaliko,

Great Idea But Cannot Use

I love the idea of this app, and it looks visually appealing. The only thing is that it does not accept input from any source other than MIDI. I don't have any way (that I know of) to make the app "listen" to mic input, either internal or external, on my Mac. Alexandre, I hope you might consider adding the ability for it to listen to mic inputs also.