NeuLab 1.6

A neural network laboratory

Developer: Tim Dyes

Category: Utilities

Price: Free

Version: 1.6

ID: com.TimDyes.NeuLab

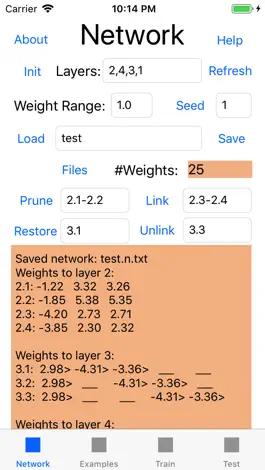

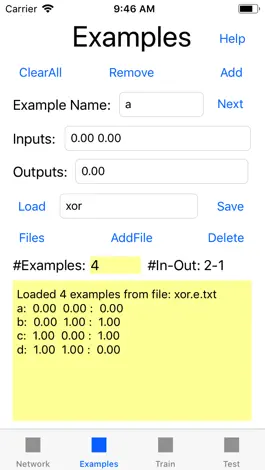

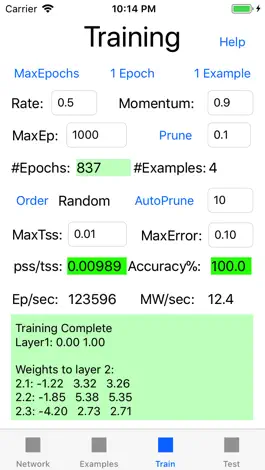

Screenshots

Description

NeuLab is a complete laboratory for learning about neural networks through hands-on use.

* Each screen has a Help button to give usage help for all controls on that screen.

* Configure, train, test, and optimize your network.

* Use AutoPrune to increase network accuracy.

* Use Prune and Link to build convolution layers.

* Define the input-output examples used for training and testing. Use NeuLab's built-in editor or define them externally for import.

* Save and export your trained networks for study and reuse.

* Save and export your training data and test results.

* Step the network training to see how internal weights change with each presentation of training data.

* Step the network testing to see how internal nodes activate in response to the inputs and produce the network outputs.

Background: Neural networks process data via highly interconnected layers of simple processing nodes. These networks are used across all areas of pattern recognition and classification: handwritten character recognition, voice recognition, face detection/recognition, image classification, biometric security, credit risk assessment, financial markets analysis, sports analysis, language processing, game playing, and the list goes on. A neural net learns by observation and doesn’t need problem-specific rules or algorithms programmed in. The key factors for success are the network architecture, the training parameters, and most importantly the training data representation and the data examples themselves.

NeuLab is primarily an educational app to give you familiarity with how a neural network operates and to see its inner workings. You configure the network and provide the example data for training and testing the network. Your trained network hopefully will have learned the relationships necessary for good results when presented with your test data. Testing the network is critical to assessing its usefulness on as yet unseen data. Examining the test failures will give you insight into how the network operates, the importance of broad representation in the training data, and the importance of the data representation itself.

NeuLab lets you define a network up to 5 layers deep with up to 100 nodes per layer.

The networks here are feed-forward: signals propagate from an input layer of nodes through one or more hidden layers and finally to an output layer. Each layer is initially fully connected to the next layer (i.e., each node in one layer is connected to every node in the next layer). Each layer also includes a bias node that is fully connected to the next layer. Non-input nodes use a sigmoid activation function, so their outputs are constrained to the range 0.0 to 1.0. Example data values are similarly constrained and should be scaled into that range if needed.
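The forward pass described above can be sketched in a few lines of Python. This is a minimal illustration of the standard technique, not NeuLab's own code. The nested-list weight layout (`weights[k][j][i]`, with the last entry of each row reserved for the bias node) and the `scale_to_unit` min-max helper are assumptions made for this example.

```python
import math


def sigmoid(x):
    """Logistic activation; the output always lies between 0.0 and 1.0."""
    return 1.0 / (1.0 + math.exp(-x))


def scale_to_unit(value, lo, hi):
    """Min-max scale a raw data value from [lo, hi] into [0.0, 1.0]."""
    return (value - lo) / (hi - lo)


def forward(weights, inputs):
    """Propagate inputs through a fully connected feed-forward network.

    weights[k][j][i] is the weight from node i of layer k to node j of layer k + 1.
    Each row has one extra trailing weight for the bias node, whose output is fixed at 1.0.
    Returns the activations of every layer, input layer first.
    """
    activations = [list(inputs)]
    for layer in weights:
        prev = activations[-1] + [1.0]  # append the bias node's constant output
        activations.append(
            [sigmoid(sum(w * a for w, a in zip(row, prev))) for row in layer]
        )
    return activations


# A 2-2-1 network: two inputs, two hidden nodes, one output (arbitrary example weights).
w = [
    [[0.5, -0.5, 0.1], [-0.3, 0.8, 0.0]],  # input -> hidden (last column: bias)
    [[1.0, -1.0, 0.2]],                    # hidden -> output (last column: bias)
]
# Raw values in the range 0..10 are scaled into 0.0..1.0 before presentation.
x = [scale_to_unit(7.0, 0.0, 10.0), scale_to_unit(2.0, 0.0, 10.0)]
print(forward(w, x)[-1])
```

Returning every layer's activations, not just the output, mirrors what stepping through a test shows: how each internal node responds to the inputs.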

A network is trained by multiple presentations of the training examples. The network learns by adjusting its internal connection weights until the outputs produced are sufficiently close to those given in the training examples. The learning algorithm is gradient descent backpropagation using the generalized delta rule as described in the seminal 1986 PDP books by Rumelhart, McClelland, et al.
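A single training presentation under the generalized delta rule can be sketched as follows. This is a hypothetical illustration, not NeuLab's implementation. It assumes the nested-list weight layout `weights[k][j][i]` (last entry of each row for the bias node), a learning rate `eta`, and a momentum term `alpha`. The PDP formulation includes momentum, but whether NeuLab exposes these parameters under these names is an assumption. Weights are updated after every example ("online" training).

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(weights, inputs):
    """Return the activations of every layer; the bias node outputs a constant 1.0."""
    acts = [list(inputs)]
    for layer in weights:
        prev = acts[-1] + [1.0]
        acts.append([sigmoid(sum(w * a for w, a in zip(row, prev))) for row in layer])
    return acts


def train_step(weights, last_change, inputs, targets, eta=0.5, alpha=0.9):
    """Present one example and apply the generalized delta rule in place.

    last_change has the same shape as weights and stores the previous weight
    changes for the momentum term. Returns the example's sum of squared errors
    measured before the update.
    """
    acts = forward(weights, inputs)
    out = acts[-1]
    # Output-layer error signal: delta_j = (t_j - o_j) * o_j * (1 - o_j)
    delta = [(t - o) * o * (1.0 - o) for t, o in zip(targets, out)]
    for k in range(len(weights) - 1, -1, -1):
        prev = acts[k] + [1.0]
        if k > 0:
            # Back-propagate using the weights *before* this layer is updated:
            # delta_i = a_i * (1 - a_i) * sum_j delta_j * w_ji  (bias node excluded)
            back = [
                a * (1.0 - a) * sum(delta[j] * weights[k][j][i] for j in range(len(delta)))
                for i, a in enumerate(acts[k])
            ]
        for j, row in enumerate(weights[k]):
            for i in range(len(row)):
                # dw_ji = eta * delta_j * o_i + alpha * (previous dw_ji)
                change = eta * delta[j] * prev[i] + alpha * last_change[k][j][i]
                row[i] += change
                last_change[k][j][i] = change
        if k > 0:
            delta = back
    return sum((t - o) ** 2 for t, o in zip(targets, out))


random.seed(0)
net = [
    [[random.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(2)],
    [[random.uniform(-0.5, 0.5) for _ in range(3)]],
]
momentum = [[[0.0] * len(row) for row in layer] for layer in net]
first = train_step(net, momentum, [1.0, 0.0], [1.0])
for _ in range(300):
    last = train_step(net, momentum, [1.0, 0.0], [1.0])
print(first, last)
```

Repeating `train_step` over every example in the training set is one epoch; training stops once the outputs are sufficiently close to the targets.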

You can vary the network topology, initialization, training parameters, and training data to see how training speed and training success are affected.

Once trained, network accuracy can be tested using examples not included in the training set. If test results are not good enough, more experimentation is needed. Perhaps more training data is needed. Perhaps the data representation is a problem. Perhaps pruning will help. Perhaps the input data is missing a key attribute.

You will develop some understanding of how these networks work, what they can be used for, and how to solve training and usage difficulties that may arise.

Please send problems, questions, requests, or comments to me at [email protected]. See the case study and the FAQ there as well.

Enjoy! - Tim Dyes

Version history

1.6

2018-03-22

This app has been updated by Apple to display the Apple Watch app icon.

A few new features for Network configuration.

1. Added a Link button. Link groups connections together so that they share the same weights. Link also restores any pruned connections involved so that you don't have to restore them separately. During training, the weights in each group are updated to the group average. (Hint: use Prune and Link to create convolution layers.)

2. Added an Unlink button to remove a linked grouping.

3. Added the Seed button to re-initialize weights to new random values without disturbing the network topology. Pruned weights and linkages are not disturbed (whereas Init clears all linkages and pruning).

4. Updated a few error messages.
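The Link and Seed behaviors in items 1 and 3 can be sketched as below. This is a hypothetical illustration, not NeuLab's internals. The `(layer, to_node, from_node)` index tuples, the `pruned` set, the function names `apply_links` and `reseed`, and the ±0.5 initialization range are all assumptions. Averaging each group after a training update keeps linked connections sharing one weight, which lets a small set of weights be reused at several positions, as in a convolution layer.

```python
import random


def apply_links(weights, groups):
    """Set every weight in each linked group to the group's average.

    Each group is a list of (layer, to_node, from_node) index tuples.
    """
    for group in groups:
        avg = sum(weights[k][j][i] for k, j, i in group) / len(group)
        for k, j, i in group:
            weights[k][j][i] = avg


def reseed(weights, pruned, groups, rng, scale=0.5):
    """Re-initialize weights randomly while keeping pruning and linkages intact."""
    for k, layer in enumerate(weights):
        for j, row in enumerate(layer):
            for i in range(len(row)):
                # Pruned connections stay clamped at 0.0; others get a fresh random value.
                row[i] = 0.0 if (k, j, i) in pruned else rng.uniform(-scale, scale)
    for group in groups:
        # Every member of a linked group starts from the same random value.
        shared = rng.uniform(-scale, scale)
        for k, j, i in group:
            weights[k][j][i] = shared


# Three inputs (plus bias) feeding two hidden nodes, each seeing a window of two inputs.
w = [[[1.0, 2.0, 0.0, 0.0], [0.0, 3.0, 4.0, 0.0]]]
pruned = {(0, 0, 2), (0, 1, 0)}  # limit each hidden node's view of the input layer
links = [[(0, 0, 0), (0, 1, 1)], [(0, 0, 1), (0, 1, 2)]]  # same "kernel" at both positions
apply_links(w, links)
print(w)  # -> [[[2.0, 3.0, 0.0, 0.0], [0.0, 2.0, 3.0, 0.0]]]
reseed(w, pruned, links, random.Random(42))
```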

1.5

2018-02-27

Giving you more design control of the network!

1. Adds controls to the Network screen that let you selectively prune/restore network connections. Thus you can constrain the network as desired to limit a node's view of the prior layer. This lets you assign a purpose to a given node or set of nodes, and makes the resulting network easier to analyze.

2. Pruned connections/weights are now shown as "___" instead of 0.00, both on screen and in saved files. This makes a pruned network easier to view and analyze.
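Selective pruning, the zero clamping introduced in version 1.1, and the "___" display can be sketched like this. The helper names and the `(layer, to_node, from_node)` bookkeeping are hypothetical, chosen only for illustration.

```python
def prune(weights, pruned, k, j, i):
    """Remove the connection from node i of layer k to node j of layer k + 1."""
    pruned.add((k, j, i))
    weights[k][j][i] = 0.0


def restore(pruned, k, j, i):
    """Re-enable a pruned connection; it resumes training from 0.0."""
    pruned.discard((k, j, i))


def clamp_pruned(weights, pruned):
    """Force pruned weights back to 0.0, e.g. after each training update."""
    for k, j, i in pruned:
        weights[k][j][i] = 0.0


def format_row(row, k, j, pruned):
    """Show pruned weights as '___' instead of 0.00."""
    return " ".join(
        "___" if (k, j, i) in pruned else f"{w:.2f}" for i, w in enumerate(row)
    )
```

Because a pruned weight is clamped rather than merely set to zero once, training cannot silently revive it, which is what keeps a pruned network easy to read.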

1.4

2018-02-13

Color coded a few fields to indicate training/testing success or failure.

a. Training screen - pss/tss field turns green if the tss for the last epoch trained is less than or equal to the MaxTss target. Turns yellow if above.

b. Training screen - Accuracy field turns green if the Accuracy of the last example/epoch trained is 100%. Turns yellow if < 100%.

c. Testing screen - Accuracy field turns green if the Accuracy of the last example/epoch tested is 100%. Turns yellow if < 100%.
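The color rules above can be sketched as follows, assuming the PDP convention that pss is the squared error summed over one example's outputs and tss is the pss summed over an epoch. How NeuLab decides that an example is "correct" for Accuracy is not stated here; the `tolerance` rule below is an assumption for illustration only.

```python
def pss(targets, outputs):
    """Pattern sum of squares: squared error summed over one example's outputs."""
    return sum((t - o) ** 2 for t, o in zip(targets, outputs))


def epoch_status(targets_list, outputs_list, max_tss, tolerance=0.5):
    """Return (tss, accuracy %, tss color, accuracy color) for one epoch.

    Assumed rule: an example is correct when every output is within
    `tolerance` of its target.
    """
    tss = sum(pss(t, o) for t, o in zip(targets_list, outputs_list))
    correct = sum(
        all(abs(t - o) < tolerance for t, o in zip(ts, outs))
        for ts, outs in zip(targets_list, outputs_list)
    )
    accuracy = 100.0 * correct / len(targets_list)
    tss_color = "green" if tss <= max_tss else "yellow"
    accuracy_color = "green" if accuracy == 100.0 else "yellow"
    return tss, accuracy, tss_color, accuracy_color
```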

1.3

2018-01-30

1. Training screen - new "Order" button toggles between Random and Sequential presentation order of training examples. Defaults to Random, in which the training set is shuffled after each training epoch.

2. Training screen - new "Ep/sec" metric shows the training rate in epochs per second.

3. Network screen - now allows up to 100 nodes per layer. The prior limit was 64.

4. Training screen - now shows each layer's node values in addition to the network weights.

5. Training and Testing screens - when running AutoPrune, MaxEp is doubled to avoid premature retraining failure.
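Random versus Sequential presentation order, and an epochs-per-second rate, can be sketched as below. The `present` callback stands in for a single training step and, like the function names, is hypothetical.

```python
import random
import time


def epoch_order(examples, order, rng):
    """Return the presentation order for one epoch ("Random" or "Sequential")."""
    if order == "Random":
        shuffled = list(examples)
        rng.shuffle(shuffled)  # a fresh shuffle every epoch
        return shuffled
    return list(examples)


def run_epochs(examples, n_epochs, order, present, rng):
    """Present every example once per epoch; return the epochs-per-second rate."""
    start = time.perf_counter()
    for _ in range(n_epochs):
        for example in epoch_order(examples, order, rng):
            present(example)
    elapsed = time.perf_counter() - start
    return n_epochs / elapsed if elapsed > 0 else float("inf")
```

Reshuffling each epoch keeps the network from adapting to a fixed sequence of examples; Sequential order is useful when stepping through training and you want a repeatable order.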

1.2

2017-12-22

1. Adds AutoPrune feature - iteratively prunes the smallest remaining weight and retrains the network, until the desired number of weights has been pruned, or until the target network accuracy on test data is reached.

2. Fixes a crash when the Prune button is pressed without a network; the app now checks for that and shows an error message.

3. Updates a few other error messages.
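The AutoPrune loop described in item 1 can be sketched as follows. `retrain` and `accuracy` are hypothetical caller-supplied hooks standing in for NeuLab's retraining and test-set evaluation, and whether the bias weights are eligible for pruning is an assumption.

```python
def auto_prune(weights, pruned, retrain, accuracy, max_prunes, target_accuracy):
    """Prune the smallest remaining weight and retrain, repeatedly.

    Stops after max_prunes prunes, or once accuracy(weights) reaches target_accuracy.
    Connections are (layer, to_node, from_node) tuples in the `pruned` set.
    """
    for _ in range(max_prunes):
        remaining = [
            (abs(w), (k, j, i))
            for k, layer in enumerate(weights)
            for j, row in enumerate(layer)
            for i, w in enumerate(row)
            if (k, j, i) not in pruned
        ]
        if not remaining:
            break
        _, (k, j, i) = min(remaining)  # smallest-magnitude unpruned weight
        pruned.add((k, j, i))
        weights[k][j][i] = 0.0  # pruned weights stay clamped at zero
        retrain(weights, pruned)
        if accuracy(weights) >= target_accuracy:
            break
    return pruned
```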

1.1

2017-12-18

1. Now supports 20-character example names (previously 10).

2. Now keeps pruned weights clamped to 0, which shortens retraining and makes the network easier to analyze.

3. Improved a few error messages.

1.0

2017-12-12

Ratings

3.6 out of 5

5 Ratings

Reviews

SpicyMelon123,

Finally, an actual working neural network!

I have downloaded about 12 different apps trying to see if there is any app that actually just lets you put in numbers and get back numbers. Simple. But no app allowed it or you had to pay for it... Thank you for making this and making it so simple and sweet.

elite barbs,

Cool app

Neat features, easy to use and explore, very easy to see what is happening as the network trains itself

ndisjcjjdidjcjidjf,

I don’t know how to use the app

It is completely impossible to understand what I expect it wasn’t easy to use it